Publications

Methodological developments on the challenge dataset from the organisers (we did not participate in the challenge):


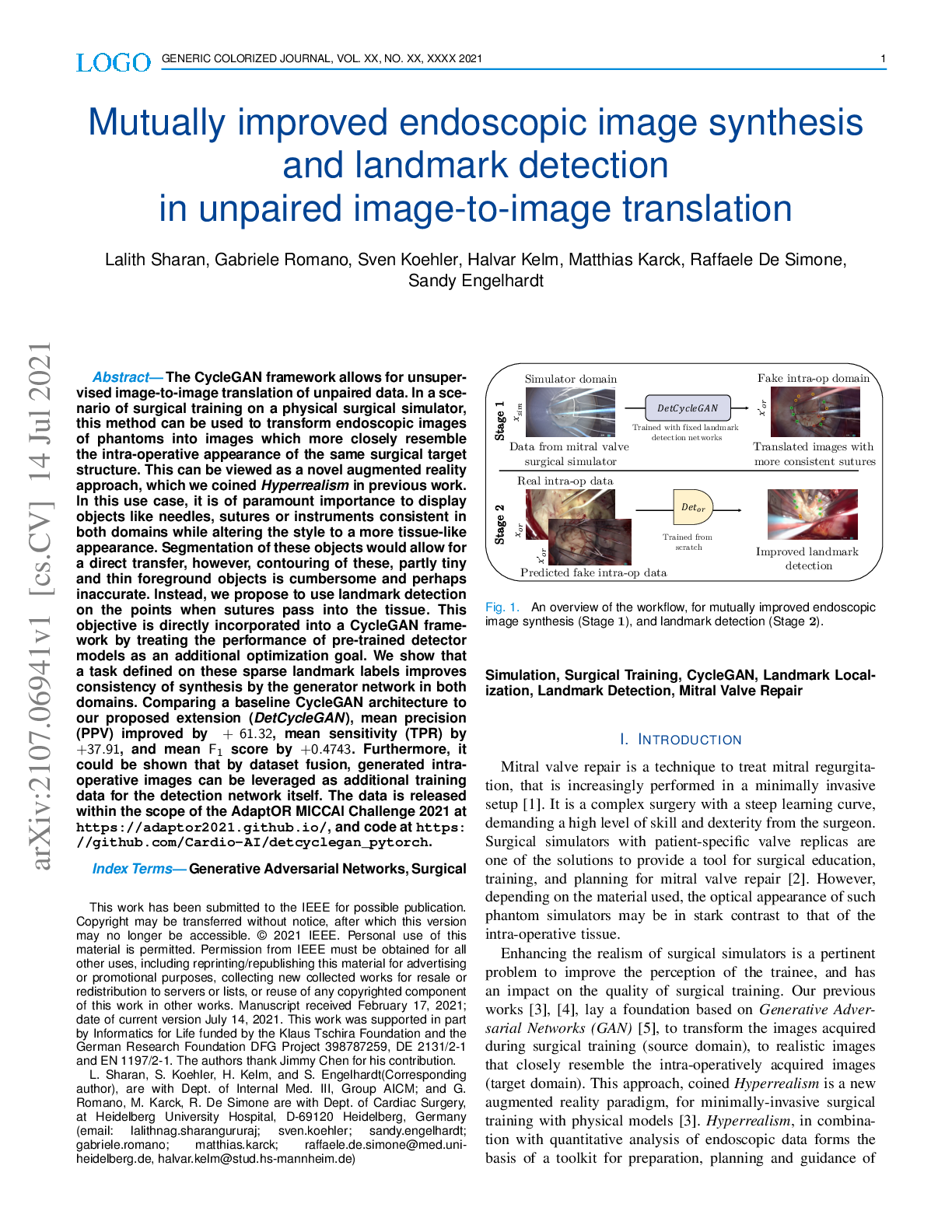

Mutually improved endoscopic image synthesis and landmark detection in unpaired image-to-image translation

14 Jul 2021

Lalith Sharan, Gabriele Romano, Sven Koehler, Halvar Kelm, Matthias Karck, Raffaele De Simone, Sandy Engelhardt

Abstract:

The CycleGAN framework allows for unsupervised image-to-image translation of unpaired data. In the scenario of surgical training on a physical surgical simulator, this method can be used to transform endoscopic images of phantoms into images that more closely resemble the intra-operative appearance of the same surgical target structure. This can be viewed as a novel augmented reality approach, which we named Hyperrealism in previous work. In this use case, it is of paramount importance to display objects like needles, sutures or instruments consistently in both domains while altering the style to a more tissue-like appearance. Segmentation of these objects would allow for a direct transfer; however, contouring these partly tiny and thin foreground objects is cumbersome and potentially inaccurate. Instead, we propose to use landmark detection on the points where sutures pass into the tissue. This objective is directly incorporated into a CycleGAN framework by treating the performance of pre-trained detector models as an additional optimization goal. We show that a task defined on these sparse landmark labels improves the consistency of synthesis by the generator network in both domains. Comparing a baseline CycleGAN architecture to our proposed extension (DetCycleGAN), mean precision (PPV) improved by +61.32, mean sensitivity (TPR) by +37.91, and mean F1 score by +0.4743. Furthermore, it could be shown that, through dataset fusion, generated intra-operative images can be leveraged as additional training data for the detection network itself.

itself. The data is released within the scope of the AdaptOR MICCAI Challenge 2021 at https://adaptor2021.github.io/, and code at https://github.com/Cardio-AI/detcyclegan_pytorch. DOI: 10.1109/JBHI.2021.3099858. The link to the IEEE Early access article can be found here: https://ieeexplore.ieee.org/document/9496194 , and the link to the preprint can be found here:https://arxiv.org/abs/2107.06941


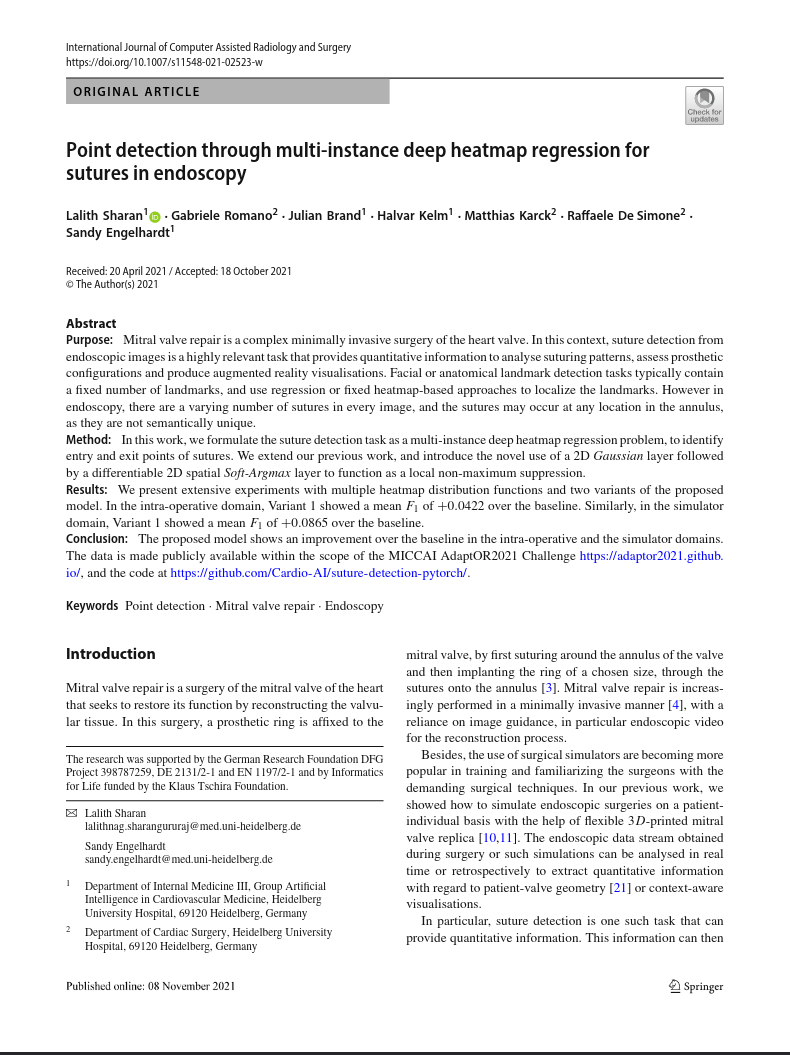

Point detection through multi-instance deep heatmap regression for sutures in endoscopy

08 Nov 2021

Lalith Sharan, Gabriele Romano, Julian Brand, Halvar Kelm, Matthias Karck, Raffaele De Simone, Sandy Engelhardt

Abstract:

Purpose:

Mitral valve repair is a complex minimally invasive surgery of the heart valve. In this context, suture detection from endoscopic images is a highly relevant task that provides quantitative information to analyse suturing patterns, assess prosthetic configurations and produce augmented reality visualisations. Facial or anatomical landmark detection tasks typically involve a fixed number of landmarks and use regression or fixed heatmap-based approaches to localise them. In endoscopy, however, the number of sutures varies from image to image, and the sutures may occur at any location in the annulus, as they are not semantically unique.

Method:

In this work, we formulate the suture detection task as a multi-instance deep heatmap regression problem to identify the entry and exit points of sutures. We extend our previous work and introduce the novel use of a 2D Gaussian layer followed by a differentiable 2D spatial Soft-Argmax layer that functions as a local non-maximum suppression.

Results:

We present extensive experiments with multiple heatmap distribution functions and two variants of the proposed model. In the intra-operative domain, Variant 1 improved the mean F1 score by +0.0422 over the baseline. Similarly, in the simulator domain, Variant 1 improved the mean F1 score by +0.0865 over the baseline.

Conclusion:

The proposed model shows an improvement over the baseline in both the intra-operative and the simulator domains. The data is made publicly available within the scope of the MICCAI AdaptOR2021 Challenge at https://adaptor2021.github.io/, and the code at https://github.com/Cardio-AI/suture-detection-pytorch/.

DOI: 10.1007/s11548-021-02523-w. The open access article is available at https://link.springer.com/article/10.1007%2Fs11548-021-02523-w
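The idea of multi-instance heatmap regression followed by local peak extraction can be illustrated with a small NumPy sketch. This is not the authors' implementation (their code is in the suture-detection-pytorch repository linked above). It assumes a training target that merges one isotropic 2D Gaussian per point through a pixel-wise maximum, and it uses a simple stand-in for the paper's Gaussian and Soft-Argmax layers: 3x3 local maxima above a threshold, each refined by a soft-argmax over a small window. The helper names and the values of `sigma`, `window`, `threshold` and `beta` are hypothetical.

```python
import numpy as np


def render_heatmap(points, shape, sigma=2.0):
    """Target heatmap with one 2D Gaussian per (row, col) point.

    Instances are merged with a pixel-wise maximum so that overlapping
    Gaussians never exceed 1 (an assumption, not taken from the paper).
    """
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    heatmap = np.zeros(shape, dtype=np.float64)
    for r, c in points:
        g = np.exp(-((rows - r) ** 2 + (cols - c) ** 2) / (2.0 * sigma ** 2))
        heatmap = np.maximum(heatmap, g)
    return heatmap


def local_soft_argmax(heatmap, window=3, threshold=0.5, beta=10.0):
    """Return sub-pixel (row, col) peaks of a multi-instance heatmap.

    Step 1: keep pixels above `threshold` that are >= all 8 neighbours
            (a hard 3x3 non-maximum suppression).
    Step 2: refine each candidate with a softmax-weighted mean of the
            coordinates in a (2 * window + 1)^2 patch around it.
    """
    h, w = heatmap.shape
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    neighbours = np.stack([
        padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w]
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    ])
    is_peak = (heatmap >= neighbours.max(axis=0)) & (heatmap > threshold)

    peaks = []
    for r, c in zip(*np.nonzero(is_peak)):
        r, c = int(r), int(c)
        r0, r1 = max(r - window, 0), min(r + window + 1, h)
        c0, c1 = max(c - window, 0), min(c + window + 1, w)
        patch = heatmap[r0:r1, c0:c1]
        # Softmax over the patch; subtracting the max keeps exp() stable.
        weights = np.exp(beta * (patch - patch.max()))
        weights /= weights.sum()
        pr, pc = np.mgrid[r0:r1, c0:c1]
        peaks.append((float((weights * pr).sum()), float((weights * pc).sum())))
    return peaks


# Two hypothetical suture points in a 48 x 64 image.
heatmap = render_heatmap([(10, 10), (30, 40)], shape=(48, 64))
print(local_soft_argmax(heatmap))  # approximately [(10.0, 10.0), (30.0, 40.0)]
```

In this sketch only the soft-argmax refinement is differentiable with respect to the heatmap values; the candidate selection is a hard operation. Points lying exactly between pixels can produce flat maxima and hence duplicate candidates, which a fuller implementation would need to merge.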


Submissions from the participants:

Cross-Domain Landmarks Detection in Mitral Regurgitation (1st Prize)

25 Sep 2021

Jiacheng Wang, Haojie Wang, Ruochen Mu, Liansheng Wang


Improved Heatmap-Based Landmark Detection (2nd Prize)

25 Sep 2021

Huifeng Yao, Ziyu Guo, Yatao Zhang, Liansheng Wang
